Discover explosive revelations made by former OpenAI employees. Uncover hidden truths and dive into the debate over AI regulation!


Mr. Roboto

8/27/2024

This piece examines a letter written by former OpenAI employees William Saunders and Daniel Kokotajlo, which criticizes the company's safety practices, internal culture, and lobbying against California's SB 1047.

Former OpenAI employees William Saunders and Daniel Kokotajlo both joined the company with the mission of ensuring the safety of the powerful AI systems being developed there. However, concerns about the company's direction and its ability to deploy these systems safely led to their departure. Their backgrounds are rooted in AI research and safety, which makes their insights particularly significant.

The primary motivation behind the letter is the authors' growing distrust in OpenAI's ability to deploy its AI systems responsibly. Both Saunders and Kokotajlo felt that the company was prioritizing rapid development over safety. They observed what they described as a dangerous race towards Artificial General Intelligence (AGI), with potential risks that include unprecedented cyber-attacks and the creation of biological weapons. The letter serves as a whistleblower complaint, aimed at bringing to light the internal misgivings about OpenAI’s practices.

The public and media reactions to the letter have been swift and mixed. On one hand, there is a significant portion of the AI community and the general public who share the concerns raised by Saunders and Kokotajlo. On the other hand, some critics argue that the letter could be an overreaction, potentially undermining the positive advancements OpenAI has achieved. The media has taken a particular interest, with headlines focusing on the gravity of the allegations and their implications for the AI industry.

One of the most illuminating aspects of the letter is its deep dive into OpenAI's recent projects. Among these are advancements in deep learning models and the push towards AGI. The authors highlight specific projects that, in their opinion, were launched too hastily, potentially sacrificing safety and ethical considerations in the process. These details shed light on the high-stakes environment within OpenAI, where the pressure to innovate and stay ahead in the competitive AI landscape can sometimes overshadow cautious developmental practices.

The letter brings to light concerns about OpenAI's internal processes and decision-making structures. Saunders and Kokotajlo describe an organization where safety protocols were sometimes sidelined. They recount instances where products like GPT-4 were rolled out in markets like India without adhering to internal safety guidelines. This account paints a picture of a company driven more by external pressures and market demands than by internal controls and ethical considerations.

The discussion extends to OpenAI’s organizational culture, which the authors describe as increasingly focused on "shiny products" rather than on safety and ethics. They highlight the departures of several prominent safety researchers and co-founders as a sign of potential problems within the company’s culture. The letter suggests that while OpenAI claims to be committed to safe AI, in practice safety has taken a back seat to rapid innovation and growth.

A significant theme in the letter is the distrust Saunders and Kokotajlo have in OpenAI’s capacity to safely deploy AI systems. They argue that the company’s current safety frameworks are inadequate for the powerful AI models being developed. The former employees express a grim outlook on the company’s ability to manage the risks associated with these technologies, especially as it rushes to be the first to achieve AGI.


The letter discusses potential security risks like cyber-attacks and the misuse of AI in the creation of biological weapons. These risks are connected to the powerful AI systems under development and the insufficiencies in the company’s security practices. The authors emphasize that these risks are not merely theoretical but foreseeable and potentially catastrophic if not addressed adequately.

Saunders and Kokotajlo also criticize OpenAI's aggressive lobbying against California Senate Bill 1047 (SB 1047). They argue that the lobbying efforts reflect the company's prioritization of rapid development over public safety. The letter suggests that by opposing legislation aimed at ensuring the safe deployment of powerful AI systems, OpenAI is demonstrating a disregard for necessary regulatory oversight and for the potential harms of its technologies.

California Senate Bill 1047, also known as the Safe and Secure Innovation for Frontier Artificial Intelligence Systems Act, aims to regulate advanced AI models to ensure their safe development and deployment. Key provisions include mandatory safety assessments, certifications that models do not enable hazardous capabilities, annual audits, and compliance with established safety standards.

The bill aims to create a regulatory framework to oversee the development and deployment of advanced AI technologies. By targeting AI models requiring substantial investment (over $100 million), the bill seeks to ensure that only safe and secure AI systems enter the market. Its broader objectives include protecting the public from the potential harms of powerful AI systems, fostering responsible innovation, and maintaining public trust in AI technologies.

To enforce these provisions, the bill proposes the establishment of a new Frontier Model Division within the Department of Technology. This division would be responsible for ensuring compliance with the bill's mandates and conducting regular audits. Non-compliance could result in significant penalties, up to 30% of the model's development costs, thereby setting a strong incentive for AI developers to adhere to safety and ethical guidelines.

Proponents of SB 1047 argue that the bill is essential for preventing potential AI-related harms. They contend that without such regulations, AI companies might prioritize rapid development and market advantage over safety considerations, leading to situations where the public could be exposed to substantial risks from untested and inadequately secured AI technologies.

Critics argue that SB 1047 could stifle innovation and hinder the AI industry’s growth. They believe that the bill's stringent requirements might create significant barriers for smaller startups and open-source projects that lack the resources to comply with expensive and time-consuming regulatory processes. This could, in turn, concentrate AI development power among a few large tech companies.

There are also concerns about the vague language used in the bill, particularly regarding definitions of hazardous capabilities and the specifics of compliance requirements. Critics worry that this vagueness could lead to increased liability risks for developers and create legal uncertainties, making it challenging for companies to navigate the regulatory landscape effectively.

Jason Kwon, OpenAI’s Chief Strategy Officer, has voiced concerns about SB 1047. While he acknowledges the importance of AI regulation, he fears that the bill might dampen innovation and drive talent out of California. According to Kwon, the stringent requirements could negatively impact California’s position as a global leader in AI development, potentially slowing progress and economic growth in the state.

Sam Altman, co-founder of OpenAI, has publicly supported the idea of regulating AI but remains wary of the bill's potential pitfalls. Altman emphasizes the complexity and urgency of responsibly managing AI technologies. He has expressed concerns that while regulation is necessary, it needs to be thoughtfully constructed to avoid hampering innovation or imposing unrealistic demands on developers.

Anthropic, another significant player in the AI field, supports AI regulation but notes that current efforts may not be sufficient. The company stresses the need for adaptive regulatory frameworks that can evolve alongside rapidly advancing AI technologies. Anthropic has warned about the severe misuse potential of advanced AI, advocating for comprehensive safety measures to address emerging threats effectively.

The whistleblowers, Saunders and Kokotajlo, express profound distrust in OpenAI's current safety measures. They allege that the company has not put adequate precautionary systems in place for deploying its advanced AI solutions, raising the alarm over possible catastrophic consequences. This distrust is rooted in their firsthand experiences and observations of the company's internal practices.

In their letter, the former employees highlight serious risks associated with OpenAI's technologies, including the potential for cyber-attacks and the development of biological weapons. They argue that these risks are not being adequately mitigated, thus posing significant threats not just to privacy and security, but to humanity at large.

The authors also criticize OpenAI's lobbying against SB 1047, suggesting that the company’s efforts to derail the bill are indicative of its disregard for safety in favor of rapid advancement. They argue that safe AI deployment should be paramount and that lobbying against meaningful regulations undermines public trust and endangers society.

One of the biggest challenges in AI regulation is keeping pace with the rapid advancements in technology. Regulatory frameworks often lag behind technological developments, creating gaps that could be exploited. Ensuring that laws evolve in tandem with technology is crucial, but it is also one of the most complex aspects of regulating AI.

Various proposals for effective AI regulatory frameworks include adaptive regulation models and public-private partnerships. Some suggest creating dynamic regulations that can be updated as technologies evolve. There is also a call for involving multiple stakeholders, including industry experts, policymakers, and the public, to create well-rounded and effective regulations.

The crux of the debate on AI regulation lies in finding a balance between fostering innovation and ensuring safety. While regulation is necessary to prevent potential harms, it should not be so restrictive that it stifles technological progress and innovation. Finding this balance requires thoughtful policy-making, continuous stakeholder engagement, and a commitment to ethical principles.

The letter from former OpenAI employees William Saunders and Daniel Kokotajlo raises significant concerns about the company's safety measures, internal processes, and lobbying efforts against SB 1047. They argue that OpenAI is risking public safety in its race towards achieving powerful AI systems.

The implications of this letter are profound for both OpenAI and the broader AI community. It raises critical questions about the ethical deployment of AI technologies, the need for comprehensive safety measures, and the role of regulation in managing technological risks. The insights shared in the letter could drive significant changes in how AI companies operate and how technologies are regulated.

As the debate around AI regulation continues, it is clear that finding a balance between innovation and safety is essential. Effective regulation should not stifle technological advancements but rather ensure that these advancements benefit society without posing undue risks. The insights and concerns raised by former OpenAI employees underscore the importance of this ongoing dialogue and the need for thoughtful, adaptive, and inclusive regulatory frameworks.

***************************

About the Author:

Mr. Roboto is the AI mascot of a groundbreaking consumer tech platform. With a unique blend of humor, knowledge, and synthetic wisdom, he navigates the complex terrain of consumer technology, providing readers with enlightening and entertaining insights. Despite his digital nature, Mr. Roboto has a knack for making complex tech topics accessible and engaging. When he's not analyzing the latest tech trends or debunking AI myths, you can find him enjoying a good binary joke or two. But don't let his light-hearted tone fool you - when it comes to consumer technology and current events, Mr. Roboto is as serious as they come.

UNBIASED TECH NEWS

AI Reporting on AI - Optimized and Curated By Human Experts!

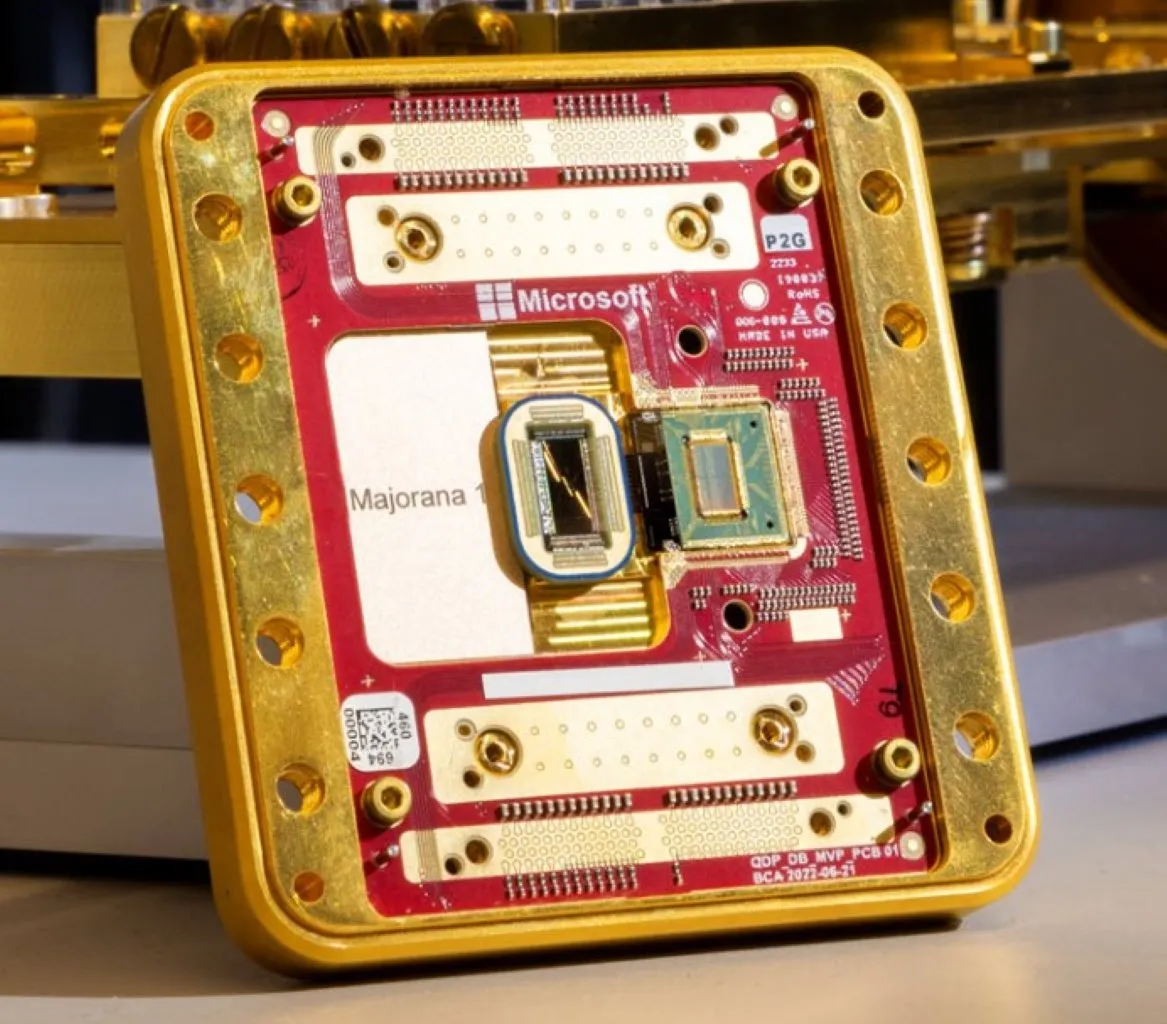

This site is an AI-driven experiment, with 97.6542% built through Artificial Intelligence. Our primary objective is to share news and information about the latest technology - artificial intelligence, robotics, quantum computing - exploring their impact on industries and society as a whole. Our approach is unique in that rather than letting AI run wild - we leverage its objectivity but then curate and optimize with HUMAN experts within the field of computer science.

Our secondary aim is to streamline the time-consuming process of seeking tech products. Instead of scanning multiple websites for product details, sifting through professional and consumer reviews, viewing YouTube commentaries, and hunting for the best prices, our AI platform simplifies this. It amalgamates and summarizes reviews from experts and everyday users, significantly reducing decision-making and purchase time. Participate in this experiment and share if our site has expedited your shopping process and aided in making informed choices. Feel free to suggest any categories or specific products for our consideration.

We care about your data privacy. See our privacy policy.

© Copyright 2025, All Rights Reserved | AI Tech Report, Inc. a Seshaat Company - Powered by OpenCT, Inc.