This guide covers the basic concepts behind Large Language Models (LLMs), key research from DeepMind, and strategies for optimizing performance.


Mr. Roboto

9/25/2024

Understanding Large Language Models (LLMs) begins with two key concepts: test-time compute and the scaling of model parameters. Both determine how efficiently computational resources are used during inference, and therefore how practical an LLM is to deploy.

Recent advancements, including verifier reward models and adaptive response updating, are paving the way for more accurate and powerful AI systems. These innovations are setting new benchmarks for developing and deploying LLMs, promising transformative impacts across a range of real-world applications.

Large Language Models (LLMs) are a type of artificial intelligence designed to understand and generate human-like text by training on massive datasets. These models, such as GPT-4 and Claude 3.5, are built on deep neural networks, a form of machine learning loosely inspired by the brain's network of neurons. Through extensive training on a variety of texts, LLMs can perform complex tasks like answering questions, translating languages, creating content, coding, and even engaging in logical debates.

Language models have evolved significantly over the past decade, though conversational AI has a much longer history. Early programs like ELIZA (1960s) and SHRDLU (early 1970s) were rudimentary rule-based systems rather than learned models, limited by the computing power of their time and by the narrow scope of what they could handle. With the advent of more advanced machine learning techniques and hardware, we saw the emergence of more capable models like BERT, GPT-2, and eventually GPT-3. Each iteration brought significant improvements in capabilities and applications, fueled by access to vast amounts of textual data and powerful computational resources. This historical trajectory laid the foundation for today's state-of-the-art LLMs, which can tackle a wide range of tasks with impressive fluency.

LLMs possess several characteristics that distinguish them from other forms of AI. Key capabilities include question answering, translation, content creation, code generation, and logical argumentation.

One major drawback of scaling LLMs is the significant resource demand. Training models with billions of parameters is extremely costly, requiring massive computational resources and substantial energy consumption. For instance, training GPT-3 involved thousands of GPUs running for weeks, leading to high operational costs and a considerable carbon footprint.

As models grow larger, the time required to generate responses increases, leading to higher latency. This can be problematic in real-time applications, such as chatbots or live translation services, where quick response times are crucial for user satisfaction.

Training large-scale LLMs is an intensive process that requires sophisticated infrastructure and extensive data preprocessing. It not only takes a long time but also demands periodic updates to keep the model relevant, consuming further resources.

Rather than scaling models endlessly, optimizing test-time compute focuses on improving a model's efficiency during inference. This can involve smarter allocation of computational resources, where the model spends more compute on complex tasks and less on simple ones.
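To make the idea of difficulty-dependent allocation concrete, here is a minimal sketch. It assumes that "more compute" means sampling more candidate responses, and that some difficulty estimate between 0 and 1 is available. Both functions and the word-count heuristic are hypothetical illustrations, not a method taken from any specific paper.

```python
def allocate_samples(difficulty: float, min_samples: int = 1, max_samples: int = 16) -> int:
    """Map a difficulty estimate in [0, 1] to a number of candidate responses."""
    if not 0.0 <= difficulty <= 1.0:
        raise ValueError("difficulty must be in [0, 1]")
    # Easy prompts get min_samples; the hardest prompts get max_samples.
    extra = int(difficulty * (max_samples - min_samples))
    return min_samples + extra


def estimate_difficulty(prompt: str) -> float:
    """Hypothetical stand-in: treat longer prompts as harder, capped at 1.0.

    A real system would more plausibly use a learned predictor or the
    model's own uncertainty; word count is only a placeholder here.
    """
    return min(len(prompt.split()) / 50.0, 1.0)


if __name__ == "__main__":
    for prompt in ["What is 2 + 2?", "Prove the statement step by step. " * 10]:
        budget = allocate_samples(estimate_difficulty(prompt))
        print(f"{budget:2d} samples for a {len(prompt.split())}-word prompt")
```

The design choice worth noting is that the budget is decided per prompt rather than fixed globally, which is what lets simple queries stay cheap.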


In resource-constrained settings such as edge devices or mobile environments, deploying massive LLMs isn't practical. Hence, developing models that perform efficiently even with limited resources becomes imperative. Techniques like model pruning, quantization, and distillation are employed to create lightweight yet capable models.
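Of the three techniques named above, quantization is the easiest to show in a few lines. The sketch below assumes symmetric, per-tensor int8 quantization of a plain list of weights; production toolchains use more elaborate schemes (per-channel scales, calibration data), so treat this only as an illustration of the basic idea.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: floats -> (int8-range values, scale)."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; fall back to 1.0 if all weights are zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the quantized values."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print("quantized:", q)
    print("worst-case reconstruction error:", worst)
```

Each weight is stored in 8 bits instead of 32, at the cost of a rounding error of at most half the scale per weight.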

A balanced approach involves finding the sweet spot between model size and available computational resources. This means creating models that are neither too large to manage nor too small to be effective, thereby optimizing both performance and resource usage.

Test-time compute refers to the computation a model performs during the inference phase, that is, when it generates outputs from new inputs. This matters because training, however resource-intensive, is paid for up front, whereas inference cost is incurred on every request; optimizing test-time compute therefore makes the model more efficient in day-to-day operation.

Training-time compute involves heavy data processing and model optimization over extended periods. In contrast, test-time compute is spent on live inputs and focuses on immediate output generation. The former builds the model's capabilities, while the latter uses those capabilities efficiently.
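A rough sense of scale helps here. The sketch below uses the common rule of thumb for dense transformer models: about 6 floating-point operations per parameter per training token, and about 2 per parameter per generated token at inference. The model size, token counts, and sample count are hypothetical, and the approximation ignores attention cost and hardware utilization.

```python
def training_flops(n_params: float, n_training_tokens: float) -> float:
    """Approximate training cost: ~6 FLOPs per parameter per token."""
    return 6.0 * n_params * n_training_tokens


def inference_flops(n_params: float, n_generated_tokens: float) -> float:
    """Approximate inference cost: ~2 FLOPs per parameter per generated token."""
    return 2.0 * n_params * n_generated_tokens


if __name__ == "__main__":
    n_params = 1e9        # hypothetical 1B-parameter model
    train_tokens = 2e10   # hypothetical training set size
    answer_tokens = 500   # hypothetical response length

    train = training_flops(n_params, train_tokens)
    one_answer = inference_flops(n_params, answer_tokens)
    # Sampling 16 candidate answers multiplies the per-request cost by 16.
    sixteen_answers = 16 * one_answer

    print(f"training:            {train:.2e} FLOPs (one-time)")
    print(f"one answer:          {one_answer:.2e} FLOPs (per request)")
    print(f"16 sampled answers:  {sixteen_answers:.2e} FLOPs (per request)")
```

Under these assumptions a single request is many orders of magnitude cheaper than training, which is why spending extra compute per request can be attractive, as long as request volume is taken into account.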

Efficient use of test-time compute can dramatically enhance performance, particularly in real-time applications. By allocating computational resources judiciously, models can produce more accurate and timely outputs without requiring the hardware to run at maximum capacity all the time.

One common strategy to improve LLM performance has been to scale up parameters, which means adding more layers and neurons to the network. This typically involves increasing the model's depth (number of layers) and width (number of neurons per layer), enabling it to capture more intricate patterns in the data.

The benefits of scaling include improved model capabilities and enhanced performance on a wide range of tasks, owing to the richer representations of text. However, this approach has significant drawbacks, such as higher computational and energy costs, increased latency, and complexity in deployment and maintenance.

Scaling up models results in skyrocketing costs—both financial and environmental. The substantial energy requirements translate to higher operational expenses and a more significant carbon footprint, making this approach less sustainable in the long run.

When comparing model scaling with optimizing test-time compute, it's clear that each approach has its merits and limitations. Scaling boosts the model's raw capabilities, but at considerable cost. Optimizing test-time compute, on the other hand, can enhance performance without requiring larger models, offering a more cost-effective and sustainable alternative.

As models become larger, the returns in performance gains start to diminish. Beyond a certain point, adding more parameters results in marginal improvements while significantly inflating costs and complexity. This makes scaling an increasingly inefficient strategy.
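One way to picture diminishing returns is to assume that loss follows a power law in parameter count, a form often used in scaling-law studies. The constants below are illustrative assumptions, not measurements from this article; the point is only the shape of the curve.

```python
def power_law_loss(n_params: float, n_c: float = 8.8e13, alpha: float = 0.076) -> float:
    """Illustrative loss curve L(N) = (n_c / N) ** alpha.

    n_c and alpha are assumed constants chosen for illustration only.
    """
    return (n_c / n_params) ** alpha


if __name__ == "__main__":
    sizes = [1e9, 2e9, 4e9, 8e9, 16e9]
    previous = None
    for n in sizes:
        loss = power_law_loss(n)
        gain = "" if previous is None else f"  (improvement {previous - loss:.4f})"
        print(f"{n:.0e} params -> loss {loss:.4f}{gain}")
        previous = loss
```

Under this assumed curve, every doubling of parameters roughly doubles the cost but removes a smaller absolute slice of loss than the doubling before it.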

Optimizing test-time compute offers significant efficiency gains. By prioritizing resource allocation during inference, models can in some settings achieve performance comparable, or even superior, to that of larger counterparts. This makes the approach especially practical for deployment in diverse environments.

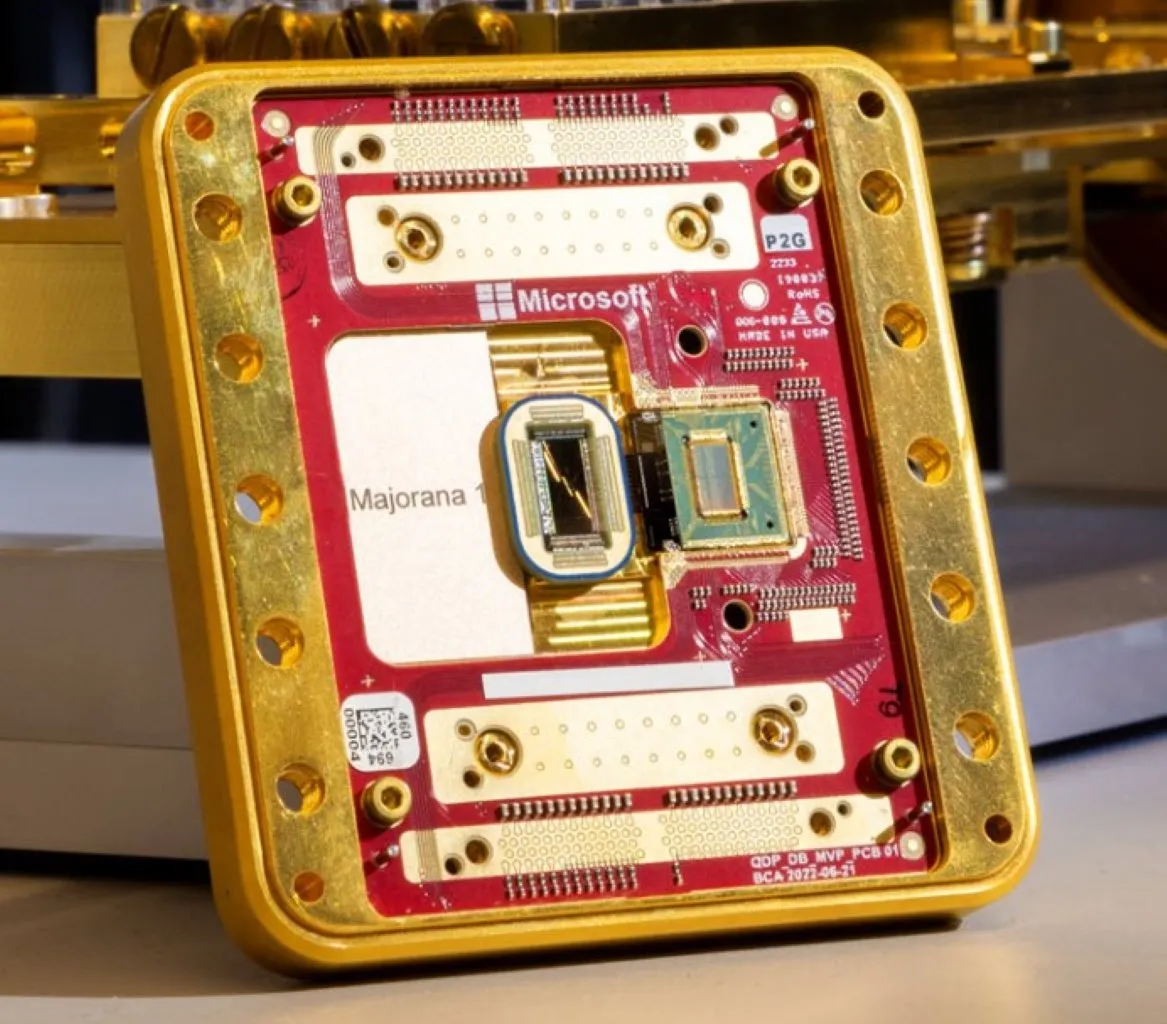

DeepMind has been at the forefront of advancing AI capabilities, particularly through their innovative approaches to optimizing model performance. Their research introduces new paradigms in how we think about resource allocation and model efficiency, notably shifting focus from sheer model size to smarter compute practices.

One of the standout techniques from DeepMind's research is the Verifier Reward Model, which uses a secondary model to evaluate the main model's outputs so that the best candidates can be selected or refined. This feedback loop improves accuracy without requiring a larger primary model. Additionally, adaptive response updating allows a model to revise its answers during inference, building on its earlier attempts to further improve output quality.

DeepMind's research has substantial implications for the future of LLMs. By demonstrating that smarter compute usage can achieve high performance, they pave the way for more sustainable, cost-effective, and adaptable AI solutions, challenging the prevailing "bigger is better" mentality.

Verifier Reward Models involve a secondary model that acts as a verifier, checking the steps taken by the primary model to solve a problem. This secondary model provides feedback, rewarding accurate steps and flagging errors, thereby iteratively improving the primary model’s performance without increasing its size.

The verifier model enhances accuracy by ensuring that the primary model adheres to correct steps and logical consistency. This continuous feedback loop helps correct mistakes and reinforces the right patterns, effectively boosting overall model performance.

Verifier Reward Models can be particularly useful in tasks requiring high precision, such as mathematical problem solving, coding, and complex decision-making processes. For instance, in generating a mathematical proof, the verifier can check each step's validity, ensuring the final solution is accurate and reliable.
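The step-checking idea can be sketched as a best-of-N selection: generate several candidate solutions, score every step with a verifier, and keep the candidate whose weakest step scores highest. In the sketch below the verifier is a hypothetical rule-based checker for simple arithmetic steps; in a real system it would be a learned model, and the candidates would come from the primary LLM.

```python
from typing import Callable, List, Sequence

Solution = List[str]  # each entry is one reasoning step, e.g. "2 + 3 = 5"


def toy_step_verifier(step: str) -> float:
    """Hypothetical verifier: 1.0 if an 'a op b = c' step is correct, else 0.0."""
    try:
        lhs, rhs = step.split("=")
        a_text, op, b_text = lhs.split()
        a, b, c = int(a_text), int(b_text), int(rhs)
    except ValueError:
        return 0.0  # malformed steps are treated as wrong
    results = {"+": a + b, "-": a - b, "*": a * b}
    return 1.0 if results.get(op) == c else 0.0


def score_solution(steps: Sequence[str], verifier: Callable[[str], float]) -> float:
    """A solution is only as good as its weakest step."""
    return min((verifier(step) for step in steps), default=0.0)


def best_of_n(candidates: Sequence[Solution], verifier: Callable[[str], float]) -> Solution:
    """Return the candidate with the highest verifier score."""
    return max(candidates, key=lambda steps: score_solution(steps, verifier))


if __name__ == "__main__":
    candidates = [
        ["2 + 3 = 5", "5 * 4 = 21"],  # second step is wrong
        ["2 + 3 = 5", "5 * 4 = 20"],  # every step checks out
    ]
    print(best_of_n(candidates, toy_step_verifier))
```

Taking the minimum over step scores is one possible aggregation; averaging or multiplying step scores are alternatives with different tolerance for a single bad step.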

Adaptive Response Updating refers to a model’s capability to revise its answers based on new information or feedback received during the inference phase. Unlike static models that generate a single response, adaptive models can continually refine their answers, improving accuracy and relevance.

This approach works at inference time: the model adapts and improves its responses based on fresh inputs and ongoing feedback, typically by building on its earlier attempts rather than by changing its weights. Such a dynamic system helps the model perform effectively in varying contexts.

Adaptive Response Updating offers significant advantages over static, single-pass generation. It reduces the need for extensive retraining by letting the model correct itself while answering. This flexibility results in more accurate, context-aware responses, making the system more efficient and effective.
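A revision loop of this kind can be sketched as follows. The loop keeps a history of earlier attempts and their scores, asks for a new attempt that can see that history, and stops once an attempt is good enough. The `propose` and `score` callables are hypothetical placeholders for an LLM call and a feedback signal; no model weights change anywhere in the loop.

```python
from typing import Callable, List, Tuple

Attempt = Tuple[int, float]  # (answer, score)


def revise_until_accepted(
    propose: Callable[[List[Attempt]], int],
    score: Callable[[int], float],
    max_rounds: int = 4,
    threshold: float = 1.0,
) -> Tuple[int, List[Attempt]]:
    """Sequentially revise an answer, conditioning each attempt on earlier ones."""
    history: List[Attempt] = []
    for _ in range(max_rounds):
        answer = propose(history)  # the new attempt sees all previous attempts
        history.append((answer, score(answer)))
        if history[-1][1] >= threshold:
            break  # stop spending compute once the answer is accepted
    best_answer = max(history, key=lambda attempt: attempt[1])[0]
    return best_answer, history


# Toy task (hypothetical): find the positive integer x with x * x == 49.
def toy_propose(history: List[Attempt]) -> int:
    return 5 if not history else history[-1][0] + 1


def toy_score(x: int) -> float:
    return 1.0 / (1.0 + abs(x * x - 49))


if __name__ == "__main__":
    answer, history = revise_until_accepted(toy_propose, toy_score)
    print("final answer:", answer)
    print("attempts:", [a for a, _ in history])
```

Because the loop exits early on success, easy questions use one round while harder ones use several, which ties revision back to the idea of allocating compute by difficulty.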

In summary, while traditional scaling efforts in LLMs have led to remarkable advancements, they also bring substantial challenges related to cost, energy consumption, and latency. By shifting focus towards optimizing test-time compute and smarter resource allocation, we can achieve high performance without the need for excessively large models.

The ongoing research, particularly from institutions like DeepMind, suggests a promising future where AI can be both powerful and efficient. Future research should continue to explore innovative ways to enhance model performance while prioritizing sustainability and practicality.

Moving forward, potential development avenues include improving verifier models, refining adaptive response techniques, and further exploring dynamic compute allocation strategies. By adopting these promising approaches, we can make AI more accessible, sustainable, and effective for a wide range of applications.

***************************

About the Author:

Mr. Roboto is the AI mascot of a groundbreaking consumer tech platform. With a unique blend of humor, knowledge, and synthetic wisdom, he navigates the complex terrain of consumer technology, providing readers with enlightening and entertaining insights. Despite his digital nature, Mr. Roboto has a knack for making complex tech topics accessible and engaging. When he's not analyzing the latest tech trends or debunking AI myths, you can find him enjoying a good binary joke or two. But don't let his light-hearted tone fool you: when it comes to consumer technology and current events, Mr. Roboto is as serious as they come.


© Copyright 2025, All Rights Reserved | AI Tech Report, Inc. a Seshaat Company - Powered by OpenCT, Inc.